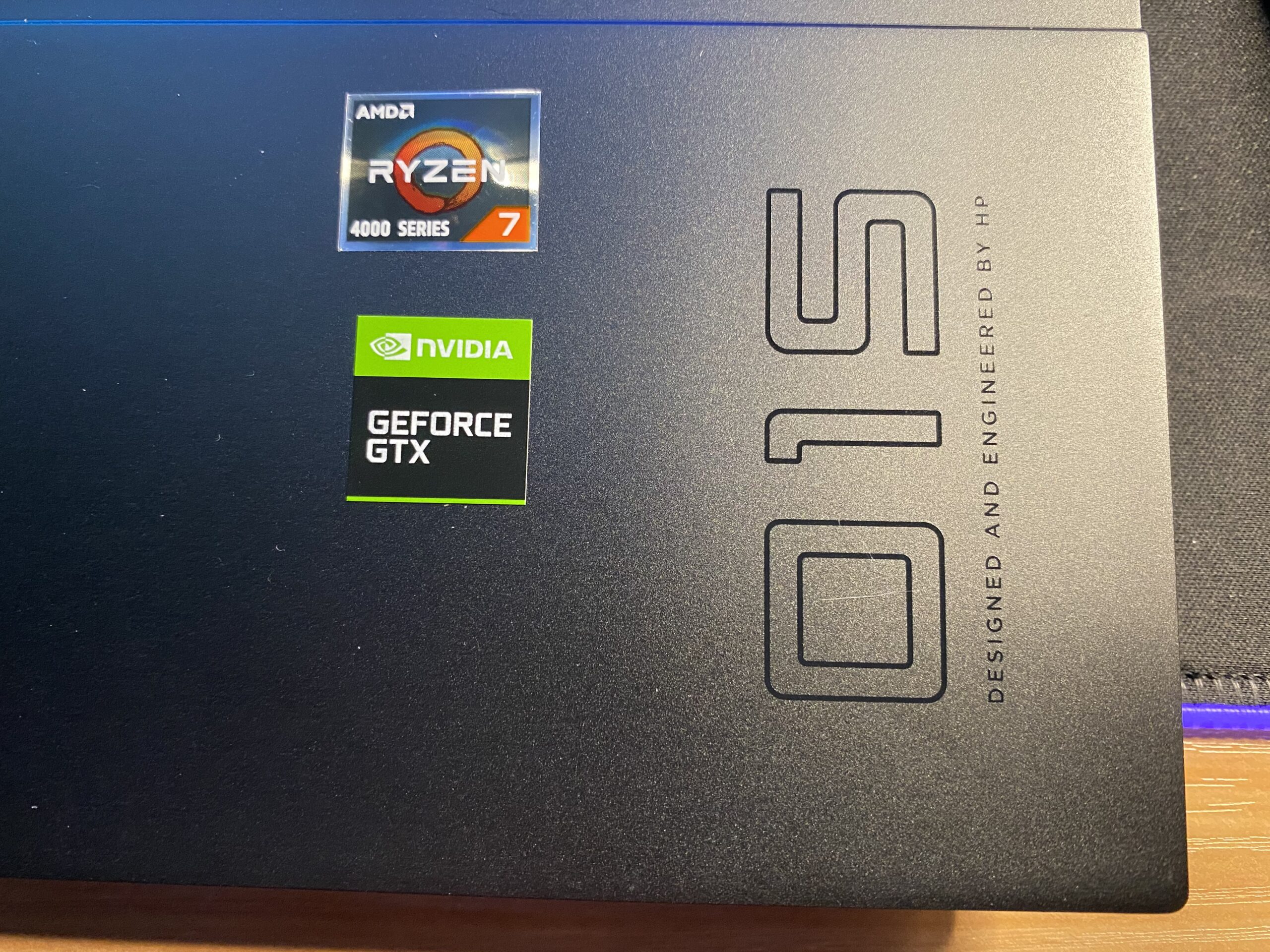

HP Omen 15 2020 Performance Review (Ryzen Edition).. Amazing or ???

I ended up returning the Asus G14. There were a number of things that weren't apparent at first or didn't seem to be an issue that, after talking to friends and listening to other reviews, made me decide to try something else. Unfortunately, for the smaller size gaming...

Review – Asus G14 Zephyrus Model:GA401IV-BR9N6

While most of my finds are bargains, refurbs, etc., this laptop is a bit different: I paid full price. In general, I don't like paying full price for a system, but in this case the reviews I saw were so overwhelmingly good that I bought it. Here is the ad from Best Buy. The...

Dell Outlet – Alienware Aurora R7 bargain find

While I was benchmarking the ASUS ROG, I was browsing the Dell.com outlet and looking at their Memorial Day deals. Lo and behold, I found one! The system I found was the following:

Alienware Aurora R7

i7-8700 (6c/12t)

16 GB RAM (2x8 GB Hynix, 2666 MHz)

256 GB (PC401 Hynix NVMe...

New Gaming Rig – ASUS ROG Zephyrus G GA502

I am forever in search of deals when it comes to computer hardware. This is possibly one of the reasons why I have 7 Dell servers sitting in a half-rack in my house (leading to disapproving stares from the wife). When I saw this laptop in a sale on Bestbuy.com ($1050),...

Intro to MongoDB (part 2)

When I left off in the last part, we were discussing MongoDB's availability features. Next, we will start on:

Scalability - We've gone over replica sets in availability. For those who come from more of an infrastructure/hardware background, this is very close to something...

Intro to MongoDB (part-1)

I don't like feeling dumb. I know this is a weird way to start a blog post. I detest feeling out of my element and inadequate. As the tech world continues to inexorably advance (exponentially, even), the likelihood that I will keep running into those feelings becomes...

3 Months in…..

I'm now slightly over 3 months into my first role as a Technical Marketing Engineer with Rubrik, Inc., and I couldn't be happier. The job brings new things often enough that I don't feel bored. And my team is amazing: I couldn't ask for a more supportive group of...

New Beginnings

It was with a bit of regret and a small bit of fear that I turned in my 2 weeks' notice last week. Even though I technically left Dell 2.5 years ago, Dell wasn't done with me yet and decided to buy the company I moved to. So essentially, I worked for Dell in some...

Tales of a Small Business Server restore……

I know that many of you have gone through your own harrowing tales of trying to bring environments back online. I always enjoy hearing about these experiences. Why? Because this is where learning takes place. Problems are found and solutions have to be worked out. While my...
