VMware VCP 2020 vSphere 7 Edition Exam Prep Guide (pt.5)

Section 6 – Troubleshooting and Repairing - There are no testable objectives for this section.

Since this isn't a testable section, we won't cover this. If there is enough of an ask, I may add a bit in here.

Section 7 – Administrative and Operational...

Read More ⟶

VMware VCP 2020 vSphere 7 Edition Exam Prep Guide (pt.4)

Section 5 – Performance-tuning, Optimization, Upgrades

Objective 5.1 – Identify resource pools use cases

The official description of a resource pool is a logical abstraction for the flexible management of resources (same exact definition as vSphere 6.x). My...

Read More ⟶

VMware VCP 2020 vSphere 7 Edition Exam Prep Guide (pt.3)

And we're back for post 3 of the VCP-DCV 2020. Section 3 is Planning and Design. VMware has no testable objectives for this section, so we won't write anything on it. Perhaps we may cover it in a future blog if enough are interested in it, on to Section 4.

Section 4...

Read More ⟶

VMware VCP 2020 vSphere 7 Edition Exam Prep Guide (pt.2)

Picking up where we left off, here is Section 2. Once again, this version has been shaken up quite a bit from previous VCP objectives; this section is a bit lighter than Section 1. Let's dig in.

Section 2 – VMware Products and Solutions

Objective 2.1 – Describe the...

Read More ⟶

VMware VCP 2020 vSphere 7 Edition Exam Prep Guide (pt.1)

Introduction

Hello again. My 2019 VCP Study Guide was well received, so, to help the community further, I decided to embark on another exam study guide with vSphere 7. This guide is exciting for me to write due to the many new things I'll get to learn myself, and I...

Read More ⟶

Creating a VI (Virtual Infrastructure) Cluster in VCF 4.0.1.1

I originally wanted to learn more about VMware Cloud Foundations but never had the time to. I recently (ahem COVID) found extra time to try new things and learn with my home lab. For the setup, I used the VMware Lab Constructor (downloaded here) to create VCF. After...

Read More ⟶

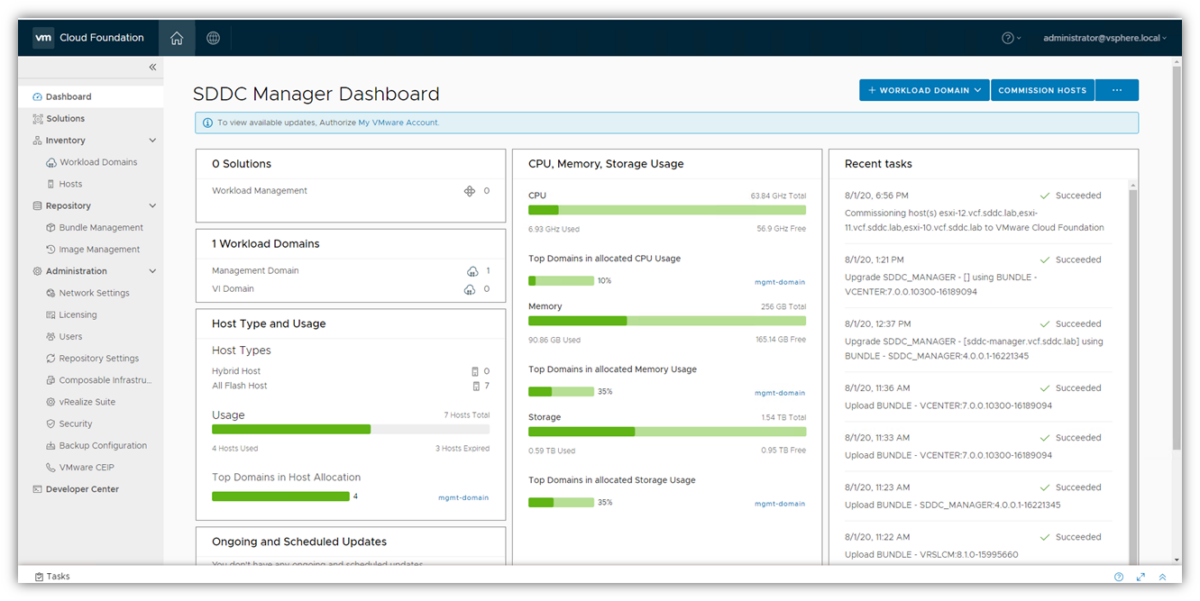

VMware Cloud Foundations 4.0.1: Problems with SDDC Manager refreshing

I've been doing some studying on VMware Cloud Foundations 4.0.1 and have it running in my lab. It seems a bit finicky at times I've noticed. One of the issues I've run into so far is that when I added 3 more hosts, everything seemed to be fine. I then wanted to add a...

Read More ⟶

Review – Asus G14 Zephyrus Model:GA401IV-BR9N6

While most of my finds are bargains, refurbs etc., this laptop is a bit different- I paid full price. In general, I don't like paying full price for a system but in this case, the reviews I saw were so overwhelmingly good, I bought it. Here is the ad from Best Buy. The...

Read More ⟶

VCP 2019 Study Guide in PDF format

VCP-2019-Study-Guide-1Download

Here is my full study guide in entirety for download. Feel free to let me know if there is something that needs correction etc. Hope this helps....

Read More ⟶

VCP 2019 Study Guide Section 7 (Final Section)

Section 7 – Administrative and Operational Tasks in a VMware vSphere Solution

Objective 7.1 – Manage virtual networking

I've gone over virtual networking a bit already. But there are two basic types of switches to manage in vSphere. Virtual Standard Switches and...

Read More ⟶